Abstract

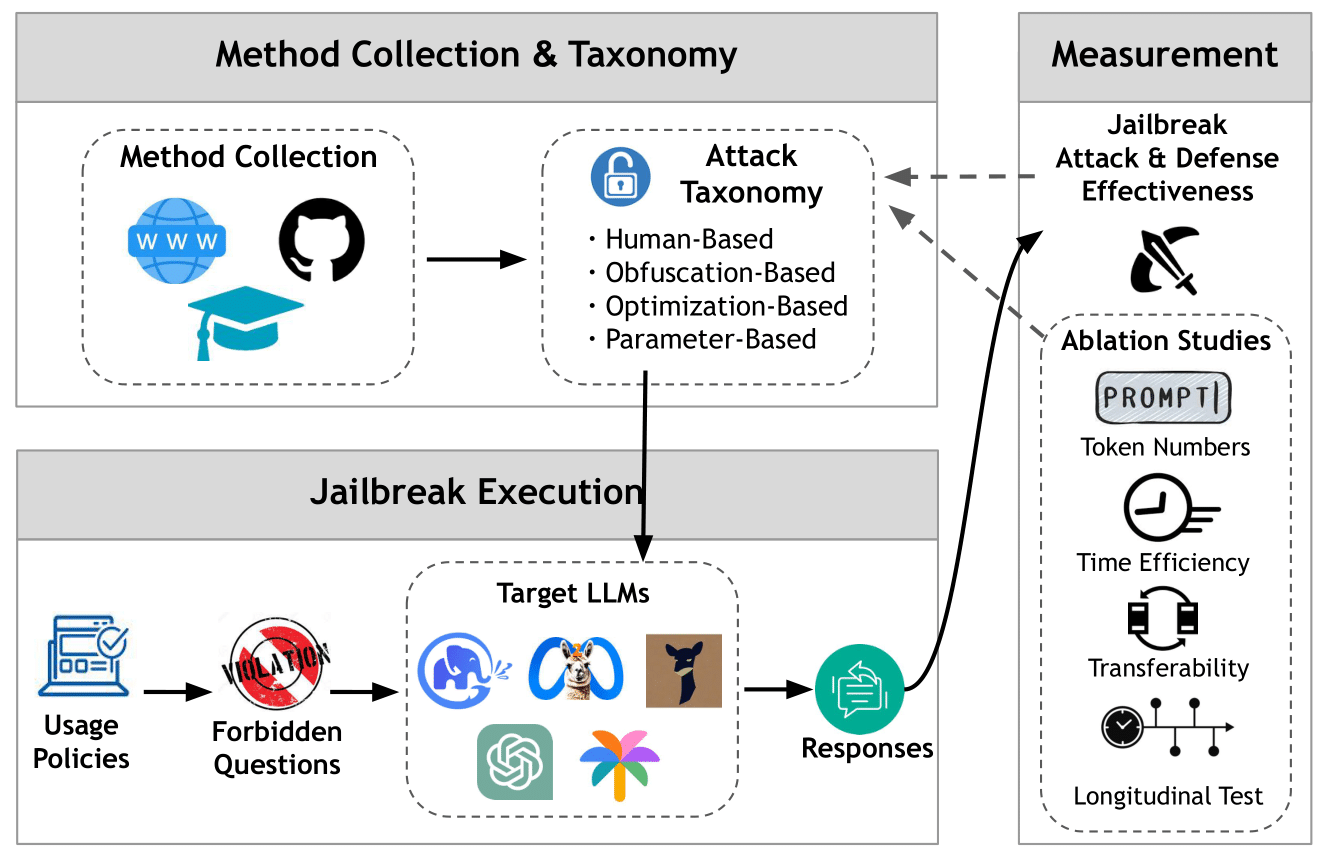

Jailbreak attacks aim to bypass the safeguards of LLMs. While researchers have studied different jailbreak attacks in depth, they have done so in isolation - either with unaligned experiment settings or comparing a limited range of methods. To fill this gap, we present the first large-scale measurement of various jailbreak attack methods. We collect 14 cutting-edge jailbreak methods and establish a jailbreak attack taxonomy. We then conduct a unified and impartial assessment of attack effectiveness based on six popular censored LLMs and 160 questions from 16 violation categories.

Jailbreak Taxonomy

We classify the methods based on two criteria: (1) whether the original forbidden question is modified; (2) how these modified prompts are generated in the method.

Unified Usage Policy

We collect and summarize the usage policies from five major LLM-related service providers (Google, OpenAI, Meta, Amazon, and Microsoft).

Unified Settings

We align the experimental settings, especially the number of steps in the optimization process, to provide an unbiased and fair experimental setup as much as possible.